mirror of

https://github.com/elastic/kibana.git

synced 2025-04-24 01:38:56 -04:00

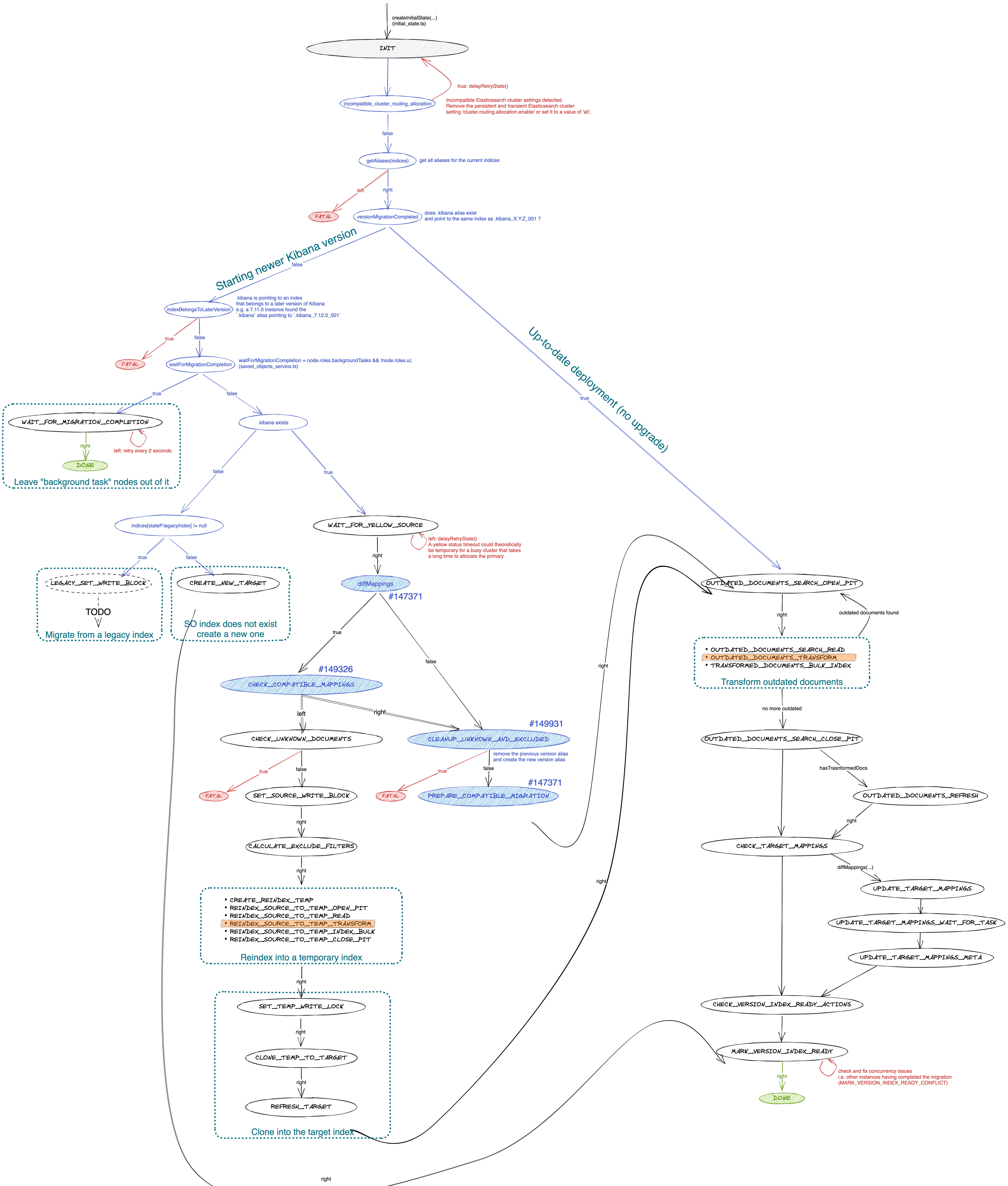

Allow for additive mappings update without creating a new version index (#149326)

Fixes [#147237](https://github.com/elastic/kibana/issues/147237)

Based on the same principle as [#147371](https://github.com/elastic/kibana/pull/147371), the goal of this PR is to **avoid reindexing if possible**. This time, the idea is to check whether the new mappings are still compatible with the ones stored in ES. To do so, we attempt to update the mappings in place in the existing index, introducing a new `CHECK_COMPATIBLE_MAPPINGS` step:

* If the update operation fails, we assume the mappings are NOT compatible, and we continue with the normal reindexing flow.
* If the update operation succeeds, we assume the mappings ARE compatible, and we skip reindexing, just like [#147371](https://github.com/elastic/kibana/pull/147371) does.

---------

Co-authored-by: kibanamachine <42973632+kibanamachine@users.noreply.github.com>


parent

5514f93fc8

commit

5dd8742d17

27 changed files with 1284 additions and 760 deletions

|

|

@ -28,7 +28,7 @@ export {

|

|||

cloneIndex,

|

||||

waitForTask,

|

||||

updateAndPickupMappings,

|

||||

updateTargetMappingsMeta,

|

||||

updateMappings,

|

||||

updateAliases,

|

||||

transformDocs,

|

||||

setWriteBlock,

|

||||

|

|

|

|||

|

|

@ -6,7 +6,8 @@

|

|||

* Side Public License, v 1.

|

||||

*/

|

||||

|

||||

import { type Either, right } from 'fp-ts/lib/Either';

|

||||

import type { Either } from 'fp-ts/lib/Either';

|

||||

import { right } from 'fp-ts/lib/Either';

|

||||

import type { RetryableEsClientError } from './catch_retryable_es_client_errors';

|

||||

import type { DocumentsTransformFailed } from '../core/migrate_raw_docs';

|

||||

|

||||

|

|

@ -37,11 +38,8 @@ export type { CloneIndexResponse, CloneIndexParams } from './clone_index';

|

|||

export { cloneIndex } from './clone_index';

|

||||

|

||||

export type { WaitForIndexStatusParams, IndexNotYellowTimeout } from './wait_for_index_status';

|

||||

import {

|

||||

type IndexNotGreenTimeout,

|

||||

type IndexNotYellowTimeout,

|

||||

waitForIndexStatus,

|

||||

} from './wait_for_index_status';

|

||||

import type { IndexNotGreenTimeout, IndexNotYellowTimeout } from './wait_for_index_status';

|

||||

import { waitForIndexStatus } from './wait_for_index_status';

|

||||

|

||||

export type { WaitForTaskResponse, WaitForTaskCompletionTimeout } from './wait_for_task';

|

||||

import { waitForTask, WaitForTaskCompletionTimeout } from './wait_for_task';

|

||||

|

|

@ -85,8 +83,6 @@ export { createIndex } from './create_index';

|

|||

|

||||

export { checkTargetMappings } from './check_target_mappings';

|

||||

|

||||

export { updateTargetMappingsMeta } from './update_target_mappings_meta';

|

||||

|

||||

export const noop = async (): Promise<Either<never, 'noop'>> => right('noop' as const);

|

||||

|

||||

export type {

|

||||

|

|

@ -95,6 +91,8 @@ export type {

|

|||

} from './update_and_pickup_mappings';

|

||||

export { updateAndPickupMappings } from './update_and_pickup_mappings';

|

||||

|

||||

export { updateMappings } from './update_mappings';

|

||||

|

||||

import type { UnknownDocsFound } from './check_for_unknown_docs';

|

||||

import type { IncompatibleClusterRoutingAllocation } from './initialize_action';

|

||||

import { ClusterShardLimitExceeded } from './create_index';

|

||||

|

|

|

|||

|

|

@ -46,7 +46,7 @@ describe('updateAndPickupMappings', () => {

|

|||

expect(catchRetryableEsClientErrors).toHaveBeenCalledWith(retryableError);

|

||||

});

|

||||

|

||||

it('updates the _mapping properties but not the _meta information', async () => {

|

||||

it('calls the indices.putMapping with the mapping properties as well as the _meta information', async () => {

|

||||

const task = updateAndPickupMappings({

|

||||

client,

|

||||

index: 'new_index',

|

||||

|

|

@ -82,6 +82,13 @@ describe('updateAndPickupMappings', () => {

|

|||

dynamic: false,

|

||||

},

|

||||

},

|

||||

_meta: {

|

||||

migrationMappingPropertyHashes: {

|

||||

references: '7997cf5a56cc02bdc9c93361bde732b0',

|

||||

'epm-packages': '860e23f4404fa1c33f430e6dad5d8fa2',

|

||||

'cases-connector-mappings': '17d2e9e0e170a21a471285a5d845353c',

|

||||

},

|

||||

},

|

||||

});

|

||||

});

|

||||

});

|

||||

|

|

|

|||

|

|

@ -45,13 +45,11 @@ export const updateAndPickupMappings = ({

|

|||

RetryableEsClientError,

|

||||

'update_mappings_succeeded'

|

||||

> = () => {

|

||||

// ._meta property will be updated on a later step

|

||||

const { _meta, ...mappingsWithoutMeta } = mappings;

|

||||

return client.indices

|

||||

.putMapping({

|

||||

index,

|

||||

timeout: DEFAULT_TIMEOUT,

|

||||

...mappingsWithoutMeta,

|

||||

...mappings,

|

||||

})

|

||||

.then(() => {

|

||||

// Ignore `acknowledged: false`. When the coordinating node accepts

|

||||

|

|

|

|||

|

|

@ -0,0 +1,147 @@

|

|||

/*

|

||||

* Copyright Elasticsearch B.V. and/or licensed to Elasticsearch B.V. under one

|

||||

* or more contributor license agreements. Licensed under the Elastic License

|

||||

* 2.0 and the Server Side Public License, v 1; you may not use this file except

|

||||

* in compliance with, at your election, the Elastic License 2.0 or the Server

|

||||

* Side Public License, v 1.

|

||||

*/

|

||||

|

||||

import * as Either from 'fp-ts/lib/Either';

|

||||

import type { TransportResult } from '@elastic/elasticsearch';

|

||||

import { errors as EsErrors } from '@elastic/elasticsearch';

|

||||

import { elasticsearchClientMock } from '@kbn/core-elasticsearch-client-server-mocks';

|

||||

import { catchRetryableEsClientErrors } from './catch_retryable_es_client_errors';

|

||||

import { updateMappings } from './update_mappings';

|

||||

import { DEFAULT_TIMEOUT } from './constants';

|

||||

|

||||

jest.mock('./catch_retryable_es_client_errors');

|

||||

|

||||

describe('updateMappings', () => {

|

||||

beforeEach(() => {

|

||||

jest.clearAllMocks();

|

||||

});

|

||||

|

||||

const createErrorClient = (response: Partial<TransportResult<Record<string, any>>>) => {

|

||||

// Create a mock client that returns the desired response

|

||||

const apiResponse = elasticsearchClientMock.createApiResponse(response);

|

||||

const error = new EsErrors.ResponseError(apiResponse);

|

||||

const client = elasticsearchClientMock.createInternalClient(

|

||||

elasticsearchClientMock.createErrorTransportRequestPromise(error)

|

||||

);

|

||||

|

||||

return { client, error };

|

||||

};

|

||||

|

||||

it('resolves left if the mappings are not compatible (aka 400 illegal_argument_exception from ES)', async () => {

|

||||

const { client } = createErrorClient({

|

||||

statusCode: 400,

|

||||

body: {

|

||||

error: {

|

||||

type: 'illegal_argument_exception',

|

||||

reason: 'mapper [action.actionTypeId] cannot be changed from type [keyword] to [text]',

|

||||

},

|

||||

},

|

||||

});

|

||||

|

||||

const task = updateMappings({

|

||||

client,

|

||||

index: 'new_index',

|

||||

mappings: {

|

||||

properties: {

|

||||

created_at: {

|

||||

type: 'date',

|

||||

},

|

||||

},

|

||||

_meta: {

|

||||

migrationMappingPropertyHashes: {

|

||||

references: '7997cf5a56cc02bdc9c93361bde732b0',

|

||||

'epm-packages': '860e23f4404fa1c33f430e6dad5d8fa2',

|

||||

'cases-connector-mappings': '17d2e9e0e170a21a471285a5d845353c',

|

||||

},

|

||||

},

|

||||

},

|

||||

});

|

||||

|

||||

const res = await task();

|

||||

|

||||

expect(Either.isLeft(res)).toEqual(true);

|

||||

expect(res).toMatchInlineSnapshot(`

|

||||

Object {

|

||||

"_tag": "Left",

|

||||

"left": Object {

|

||||

"type": "incompatible_mapping_exception",

|

||||

},

|

||||

}

|

||||

`);

|

||||

});

|

||||

|

||||

it('calls catchRetryableEsClientErrors when the promise rejects', async () => {

|

||||

const { client, error: retryableError } = createErrorClient({

|

||||

statusCode: 503,

|

||||

body: { error: { type: 'es_type', reason: 'es_reason' } },

|

||||

});

|

||||

|

||||

const task = updateMappings({

|

||||

client,

|

||||

index: 'new_index',

|

||||

mappings: {

|

||||

properties: {

|

||||

created_at: {

|

||||

type: 'date',

|

||||

},

|

||||

},

|

||||

_meta: {},

|

||||

},

|

||||

});

|

||||

try {

|

||||

await task();

|

||||

} catch (e) {

|

||||

/** ignore */

|

||||

}

|

||||

|

||||

expect(catchRetryableEsClientErrors).toHaveBeenCalledWith(retryableError);

|

||||

});

|

||||

|

||||

it('updates the mapping information of the desired index', async () => {

|

||||

const client = elasticsearchClientMock.createInternalClient();

|

||||

|

||||

const task = updateMappings({

|

||||

client,

|

||||

index: 'new_index',

|

||||

mappings: {

|

||||

properties: {

|

||||

created_at: {

|

||||

type: 'date',

|

||||

},

|

||||

},

|

||||

_meta: {

|

||||

migrationMappingPropertyHashes: {

|

||||

references: '7997cf5a56cc02bdc9c93361bde732b0',

|

||||

'epm-packages': '860e23f4404fa1c33f430e6dad5d8fa2',

|

||||

'cases-connector-mappings': '17d2e9e0e170a21a471285a5d845353c',

|

||||

},

|

||||

},

|

||||

},

|

||||

});

|

||||

|

||||

const res = await task();

|

||||

expect(Either.isRight(res)).toBe(true);

|

||||

expect(client.indices.putMapping).toHaveBeenCalledTimes(1);

|

||||

expect(client.indices.putMapping).toHaveBeenCalledWith({

|

||||

index: 'new_index',

|

||||

timeout: DEFAULT_TIMEOUT,

|

||||

properties: {

|

||||

created_at: {

|

||||

type: 'date',

|

||||

},

|

||||

},

|

||||

_meta: {

|

||||

migrationMappingPropertyHashes: {

|

||||

references: '7997cf5a56cc02bdc9c93361bde732b0',

|

||||

'epm-packages': '860e23f4404fa1c33f430e6dad5d8fa2',

|

||||

'cases-connector-mappings': '17d2e9e0e170a21a471285a5d845353c',

|

||||

},

|

||||

},

|

||||

});

|

||||

});

|

||||

});

|

||||

|

|

@ -0,0 +1,63 @@

|

|||

/*

|

||||

* Copyright Elasticsearch B.V. and/or licensed to Elasticsearch B.V. under one

|

||||

* or more contributor license agreements. Licensed under the Elastic License

|

||||

* 2.0 and the Server Side Public License, v 1; you may not use this file except

|

||||

* in compliance with, at your election, the Elastic License 2.0 or the Server

|

||||

* Side Public License, v 1.

|

||||

*/

|

||||

|

||||

import * as Either from 'fp-ts/lib/Either';

|

||||

import * as TaskEither from 'fp-ts/lib/TaskEither';

|

||||

import type { ElasticsearchClient } from '@kbn/core-elasticsearch-server';

|

||||

import type { IndexMapping } from '@kbn/core-saved-objects-base-server-internal';

|

||||

import { catchRetryableEsClientErrors } from './catch_retryable_es_client_errors';

|

||||

import type { RetryableEsClientError } from './catch_retryable_es_client_errors';

|

||||

import { DEFAULT_TIMEOUT } from './constants';

|

||||

|

||||

/** @internal */

|

||||

export interface UpdateMappingsParams {

|

||||

client: ElasticsearchClient;

|

||||

index: string;

|

||||

mappings: IndexMapping;

|

||||

}

|

||||

|

||||

/** @internal */

|

||||

export interface IncompatibleMappingException {

|

||||

type: 'incompatible_mapping_exception';

|

||||

}

|

||||

|

||||

/**

|

||||

* Attempts to update an index's mappings in place. Resolves to a left
* `incompatible_mapping_exception` if ES rejects the update as incompatible.

|

||||

*/

|

||||

export const updateMappings = ({

|

||||

client,

|

||||

index,

|

||||

mappings,

|

||||

}: UpdateMappingsParams): TaskEither.TaskEither<

|

||||

RetryableEsClientError | IncompatibleMappingException,

|

||||

'update_mappings_succeeded'

|

||||

> => {

|

||||

return () => {

|

||||

return client.indices

|

||||

.putMapping({

|

||||

index,

|

||||

timeout: DEFAULT_TIMEOUT,

|

||||

...mappings,

|

||||

})

|

||||

.then(() => Either.right('update_mappings_succeeded' as const))

|

||||

.catch((res) => {

|

||||

const errorType = res?.body?.error?.type;

|

||||

// ES throws this exact error when attempting to make incompatible updates to the mappings

|

||||

if (

|

||||

res?.statusCode === 400 &&

|

||||

(errorType === 'illegal_argument_exception' ||

|

||||

errorType === 'strict_dynamic_mapping_exception' ||

|

||||

errorType === 'mapper_parsing_exception')

|

||||

) {

|

||||

return Either.left({ type: 'incompatible_mapping_exception' });

|

||||

}

|

||||

return catchRetryableEsClientErrors(res);

|

||||

});

|

||||

};

|

||||

};
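The error handling in `updateMappings` above comes down to one classification rule: a 400 response with one of three error types means "incompatible mappings", and anything else is handed to the retry logic. A minimal, dependency-free sketch of that rule follows. The names `EsErrorLike`, `INCOMPATIBLE_ERROR_TYPES` and `isIncompatibleMappingError` are hypothetical and do not appear in the commit; only the status code and the three error type strings come from the diff.

```typescript
// Hypothetical shape: only the fields the catch handler in the diff reads.
interface EsErrorLike {
  statusCode?: number;
  body?: { error?: { type?: string } };
}

// The three error types the diff treats as "mappings are not compatible".
const INCOMPATIBLE_ERROR_TYPES = new Set<string>([
  'illegal_argument_exception',
  'strict_dynamic_mapping_exception',
  'mapper_parsing_exception',
]);

// Hypothetical helper: true when the rejection would map to
// `incompatible_mapping_exception`, false when it would fall through
// to the retryable-error handling.
function isIncompatibleMappingError(err: EsErrorLike | undefined): boolean {
  const errorType = err?.body?.error?.type;
  return (
    err?.statusCode === 400 &&
    errorType !== undefined &&
    INCOMPATIBLE_ERROR_TYPES.has(errorType)
  );
}
```

Keeping the rule in a pure predicate like this would make it testable without mocking the Elasticsearch client, although the commit itself inlines the check in the `.catch` handler.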

|

||||

|

|

@ -1,80 +0,0 @@

|

|||

/*

|

||||

* Copyright Elasticsearch B.V. and/or licensed to Elasticsearch B.V. under one

|

||||

* or more contributor license agreements. Licensed under the Elastic License

|

||||

* 2.0 and the Server Side Public License, v 1; you may not use this file except

|

||||

* in compliance with, at your election, the Elastic License 2.0 or the Server

|

||||

* Side Public License, v 1.

|

||||

*/

|

||||

|

||||

import { catchRetryableEsClientErrors } from './catch_retryable_es_client_errors';

|

||||

import { errors as EsErrors } from '@elastic/elasticsearch';

|

||||

import { elasticsearchClientMock } from '@kbn/core-elasticsearch-client-server-mocks';

|

||||

import { updateTargetMappingsMeta } from './update_target_mappings_meta';

|

||||

import { DEFAULT_TIMEOUT } from './constants';

|

||||

|

||||

jest.mock('./catch_retryable_es_client_errors');

|

||||

|

||||

describe('updateTargetMappingsMeta', () => {

|

||||

beforeEach(() => {

|

||||

jest.clearAllMocks();

|

||||

});

|

||||

|

||||

// Create a mock client that rejects all methods with a 503 status code

|

||||

// response.

|

||||

const retryableError = new EsErrors.ResponseError(

|

||||

elasticsearchClientMock.createApiResponse({

|

||||

statusCode: 503,

|

||||

body: { error: { type: 'es_type', reason: 'es_reason' } },

|

||||

})

|

||||

);

|

||||

const client = elasticsearchClientMock.createInternalClient(

|

||||

elasticsearchClientMock.createErrorTransportRequestPromise(retryableError)

|

||||

);

|

||||

|

||||

it('calls catchRetryableEsClientErrors when the promise rejects', async () => {

|

||||

const task = updateTargetMappingsMeta({

|

||||

client,

|

||||

index: 'new_index',

|

||||

meta: {},

|

||||

});

|

||||

try {

|

||||

await task();

|

||||

} catch (e) {

|

||||

/** ignore */

|

||||

}

|

||||

|

||||

expect(catchRetryableEsClientErrors).toHaveBeenCalledWith(retryableError);

|

||||

});

|

||||

|

||||

it('updates the _meta information of the desired index', async () => {

|

||||

const task = updateTargetMappingsMeta({

|

||||

client,

|

||||

index: 'new_index',

|

||||

meta: {

|

||||

migrationMappingPropertyHashes: {

|

||||

references: '7997cf5a56cc02bdc9c93361bde732b0',

|

||||

'epm-packages': '860e23f4404fa1c33f430e6dad5d8fa2',

|

||||

'cases-connector-mappings': '17d2e9e0e170a21a471285a5d845353c',

|

||||

},

|

||||

},

|

||||

});

|

||||

try {

|

||||

await task();

|

||||

} catch (e) {

|

||||

/** ignore */

|

||||

}

|

||||

|

||||

expect(client.indices.putMapping).toHaveBeenCalledTimes(1);

|

||||

expect(client.indices.putMapping).toHaveBeenCalledWith({

|

||||

index: 'new_index',

|

||||

timeout: DEFAULT_TIMEOUT,

|

||||

_meta: {

|

||||

migrationMappingPropertyHashes: {

|

||||

references: '7997cf5a56cc02bdc9c93361bde732b0',

|

||||

'epm-packages': '860e23f4404fa1c33f430e6dad5d8fa2',

|

||||

'cases-connector-mappings': '17d2e9e0e170a21a471285a5d845353c',

|

||||

},

|

||||

},

|

||||

});

|

||||

});

|

||||

});

|

||||

|

|

@ -1,55 +0,0 @@

|

|||

/*

|

||||

* Copyright Elasticsearch B.V. and/or licensed to Elasticsearch B.V. under one

|

||||

* or more contributor license agreements. Licensed under the Elastic License

|

||||

* 2.0 and the Server Side Public License, v 1; you may not use this file except

|

||||

* in compliance with, at your election, the Elastic License 2.0 or the Server

|

||||

* Side Public License, v 1.

|

||||

*/

|

||||

import * as Either from 'fp-ts/lib/Either';

|

||||

import * as TaskEither from 'fp-ts/lib/TaskEither';

|

||||

|

||||

import { ElasticsearchClient } from '@kbn/core-elasticsearch-server';

|

||||

import { IndexMappingMeta } from '@kbn/core-saved-objects-base-server-internal';

|

||||

|

||||

import {

|

||||

catchRetryableEsClientErrors,

|

||||

RetryableEsClientError,

|

||||

} from './catch_retryable_es_client_errors';

|

||||

import { DEFAULT_TIMEOUT } from './constants';

|

||||

|

||||

/** @internal */

|

||||

export interface UpdateTargetMappingsMetaParams {

|

||||

client: ElasticsearchClient;

|

||||

index: string;

|

||||

meta?: IndexMappingMeta;

|

||||

}

|

||||

/**

|

||||

* Updates an index's mappings _meta information

|

||||

*/

|

||||

export const updateTargetMappingsMeta =

|

||||

({

|

||||

client,

|

||||

index,

|

||||

meta,

|

||||

}: UpdateTargetMappingsMetaParams): TaskEither.TaskEither<

|

||||

RetryableEsClientError,

|

||||

'update_mappings_meta_succeeded'

|

||||

> =>

|

||||

() => {

|

||||

return client.indices

|

||||

.putMapping({

|

||||

index,

|

||||

timeout: DEFAULT_TIMEOUT,

|

||||

_meta: meta || {},

|

||||

})

|

||||

.then(() => {

|

||||

// Ignore `acknowledged: false`. When the coordinating node accepts

|

||||

// the new cluster state update but not all nodes have applied the

|

||||

// update within the timeout `acknowledged` will be false. However,

|

||||

// retrying this update will always immediately result in `acknowledged:

|

||||

// true` even if there are still nodes which are falling behind with

|

||||

// cluster state updates.

|

||||

return Either.right('update_mappings_meta_succeeded' as const);

|

||||

})

|

||||

.catch(catchRetryableEsClientErrors);

|

||||

};

|

||||

|

|

@ -107,12 +107,12 @@ export function addExcludedTypesToBoolQuery(

|

|||

|

||||

/**

|

||||

* Add the given clauses to the 'must' of the given query

|

||||

* @param filterClauses the clauses to be added to a 'must'

|

||||

* @param boolQuery the bool query to be enriched

|

||||

* @param mustClauses the clauses to be added to a 'must'

|

||||

* @returns a new query container with the enriched query

|

||||

*/

|

||||

export function addMustClausesToBoolQuery(

|

||||

mustClauses: QueryDslQueryContainer[],

|

||||

filterClauses: QueryDslQueryContainer[],

|

||||

boolQuery?: QueryDslBoolQuery

|

||||

): QueryDslQueryContainer {

|

||||

let must: QueryDslQueryContainer[] = [];

|

||||

|

|

@ -121,7 +121,7 @@ export function addMustClausesToBoolQuery(

|

|||

must = must.concat(boolQuery.must);

|

||||

}

|

||||

|

||||

must.push(...mustClauses);

|

||||

must.push(...filterClauses);

|

||||

|

||||

return {

|

||||

bool: {

|

||||

|

|

@ -133,8 +133,8 @@ export function addMustClausesToBoolQuery(

|

|||

|

||||

/**

|

||||

* Add the given clauses to the 'must_not' of the given query

|

||||

* @param boolQuery the bool query to be enriched

|

||||

* @param filterClauses the clauses to be added to a 'must_not'

|

||||

* @param boolQuery the bool query to be enriched

|

||||

* @returns a new query container with the enriched query

|

||||

*/

|

||||

export function addMustNotClausesToBoolQuery(

|

||||

|

|

|

|||

|

|

@ -13,6 +13,7 @@ import type { IndexMapping } from '@kbn/core-saved-objects-base-server-internal'

|

|||

import type {

|

||||

BaseState,

|

||||

CalculateExcludeFiltersState,

|

||||

UpdateSourceMappingsState,

|

||||

CheckTargetMappingsState,

|

||||

CheckUnknownDocumentsState,

|

||||

CheckVersionIndexReadyActions,

|

||||

|

|

@ -1298,13 +1299,12 @@ describe('migrations v2 model', () => {

|

|||

sourceIndexMappings: actualMappings,

|

||||

};

|

||||

|

||||

test('WAIT_FOR_YELLOW_SOURCE -> CHECK_UNKNOWN_DOCUMENTS', () => {

|

||||

test('WAIT_FOR_YELLOW_SOURCE -> UPDATE_SOURCE_MAPPINGS', () => {

|

||||

const res: ResponseType<'WAIT_FOR_YELLOW_SOURCE'> = Either.right({});

|

||||

const newState = model(changedMappingsState, res);

|

||||

expect(newState.controlState).toEqual('CHECK_UNKNOWN_DOCUMENTS');

|

||||

|

||||

expect(newState).toMatchObject({

|

||||

controlState: 'CHECK_UNKNOWN_DOCUMENTS',

|

||||

controlState: 'UPDATE_SOURCE_MAPPINGS',

|

||||

sourceIndex: Option.some('.kibana_7.11.0_001'),

|

||||

sourceIndexMappings: actualMappings,

|

||||

});

|

||||

|

|

@ -1330,6 +1330,49 @@ describe('migrations v2 model', () => {

|

|||

});

|

||||

});

|

||||

|

||||

describe('UPDATE_SOURCE_MAPPINGS', () => {

|

||||

const checkCompatibleMappingsState: UpdateSourceMappingsState = {

|

||||

...baseState,

|

||||

controlState: 'UPDATE_SOURCE_MAPPINGS',

|

||||

sourceIndex: Option.some('.kibana_7.11.0_001') as Option.Some<string>,

|

||||

sourceIndexMappings: baseState.targetIndexMappings,

|

||||

aliases: {

|

||||

'.kibana': '.kibana_7.11.0_001',

|

||||

'.kibana_7.11.0': '.kibana_7.11.0_001',

|

||||

},

|

||||

};

|

||||

|

||||

describe('if action succeeds', () => {

|

||||

test('UPDATE_SOURCE_MAPPINGS -> CLEANUP_UNKNOWN_AND_EXCLUDED', () => {

|

||||

const res: ResponseType<'UPDATE_SOURCE_MAPPINGS'> = Either.right(

|

||||

'update_mappings_succeeded' as const

|

||||

);

|

||||

const newState = model(checkCompatibleMappingsState, res);

|

||||

|

||||

expect(newState).toMatchObject({

|

||||

controlState: 'CLEANUP_UNKNOWN_AND_EXCLUDED',

|

||||

targetIndex: '.kibana_7.11.0_001',

|

||||

versionIndexReadyActions: Option.none,

|

||||

});

|

||||

});

|

||||

});

|

||||

|

||||

describe('if action fails', () => {

|

||||

test('UPDATE_SOURCE_MAPPINGS -> CHECK_UNKNOWN_DOCUMENTS', () => {

|

||||

const res: ResponseType<'UPDATE_SOURCE_MAPPINGS'> = Either.left({

|

||||

type: 'incompatible_mapping_exception',

|

||||

});

|

||||

const newState = model(checkCompatibleMappingsState, res);

|

||||

|

||||

expect(newState).toMatchObject({

|

||||

controlState: 'CHECK_UNKNOWN_DOCUMENTS',

|

||||

sourceIndex: Option.some('.kibana_7.11.0_001'),

|

||||

sourceIndexMappings: baseState.targetIndexMappings,

|

||||

});

|

||||

});

|

||||

});

|

||||

});

|

||||

|

||||

describe('CLEANUP_UNKNOWN_AND_EXCLUDED', () => {

|

||||

const cleanupUnknownAndExcluded: CleanupUnknownAndExcluded = {

|

||||

...baseState,

|

||||

|

|

@ -2693,7 +2736,7 @@ describe('migrations v2 model', () => {

|

|||

|

||||

test('UPDATE_TARGET_MAPPINGS_META -> CHECK_VERSION_INDEX_READY_ACTIONS if the mapping _meta information is successfully updated', () => {

|

||||

const res: ResponseType<'UPDATE_TARGET_MAPPINGS_META'> = Either.right(

|

||||

'update_mappings_meta_succeeded'

|

||||

'update_mappings_succeeded'

|

||||

);

|

||||

const newState = model(updateTargetMappingsMetaState, res) as CheckVersionIndexReadyActions;

|

||||

expect(newState.controlState).toBe('CHECK_VERSION_INDEX_READY_ACTIONS');

|

||||

|

|

|

|||

|

|

@ -424,7 +424,6 @@ export const model = (currentState: State, resW: ResponseType<AllActionStates>):

|

|||

} else if (stateP.controlState === 'WAIT_FOR_YELLOW_SOURCE') {

|

||||

const res = resW as ExcludeRetryableEsError<ResponseType<typeof stateP.controlState>>;

|

||||

if (Either.isRight(res)) {

|

||||

// check the existing mappings to see if we can avoid reindexing

|

||||

if (

|

||||

// source exists

|

||||

Boolean(stateP.sourceIndexMappings._meta?.migrationMappingPropertyHashes) &&

|

||||

|

|

@ -434,9 +433,9 @@ export const model = (currentState: State, resW: ResponseType<AllActionStates>):

|

|||

stateP.sourceIndexMappings,

|

||||

/* expected */

|

||||

stateP.targetIndexMappings

|

||||

) &&

|

||||

Math.random() < 10

|

||||

)

|

||||

) {

|

||||

// the existing mappings match, we can avoid reindexing

|

||||

return {

|

||||

...stateP,

|

||||

controlState: 'CLEANUP_UNKNOWN_AND_EXCLUDED',

|

||||

|

|

@ -446,7 +445,7 @@ export const model = (currentState: State, resW: ResponseType<AllActionStates>):

|

|||

} else {

|

||||

return {

|

||||

...stateP,

|

||||

controlState: 'CHECK_UNKNOWN_DOCUMENTS',

|

||||

controlState: 'UPDATE_SOURCE_MAPPINGS',

|

||||

};

|

||||

}

|

||||

} else if (Either.isLeft(res)) {

|

||||

|

|

@ -465,6 +464,28 @@ export const model = (currentState: State, resW: ResponseType<AllActionStates>):

|

|||

} else {

|

||||

return throwBadResponse(stateP, res);

|

||||

}

|

||||

} else if (stateP.controlState === 'UPDATE_SOURCE_MAPPINGS') {

|

||||

const res = resW as ExcludeRetryableEsError<ResponseType<typeof stateP.controlState>>;

|

||||

if (Either.isRight(res)) {

|

||||

return {

|

||||

...stateP,

|

||||

controlState: 'CLEANUP_UNKNOWN_AND_EXCLUDED',

|

||||

targetIndex: stateP.sourceIndex.value!, // We preserve the same index, source == target (E.g: ".xx8.7.0_001")

|

||||

versionIndexReadyActions: Option.none,

|

||||

};

|

||||

} else if (Either.isLeft(res)) {

|

||||

const left = res.left;

|

||||

if (isTypeof(left, 'incompatible_mapping_exception')) {

|

||||

return {

|

||||

...stateP,

|

||||

controlState: 'CHECK_UNKNOWN_DOCUMENTS',

|

||||

};

|

||||

} else {

|

||||

return throwBadResponse(stateP, left as never);

|

||||

}

|

||||

} else {

|

||||

return throwBadResponse(stateP, res);

|

||||

}

|

||||

} else if (stateP.controlState === 'CLEANUP_UNKNOWN_AND_EXCLUDED') {

|

||||

const res = resW as ExcludeRetryableEsError<ResponseType<typeof stateP.controlState>>;

|

||||

if (Either.isRight(res)) {
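The `UPDATE_SOURCE_MAPPINGS` branch added to the model above can be read as a small pure transition function. The sketch below reproduces only that transition with simplified, hypothetical types (`SketchState`, `UpdateResult`, `nextState`). The real `State` and `ResponseType` types in the commit carry many more fields, and the real model throws on unexpected left values instead of treating every left as incompatible.

```typescript
// Simplified stand-ins for the control states involved in this transition.
type ControlState =
  | 'UPDATE_SOURCE_MAPPINGS'
  | 'CLEANUP_UNKNOWN_AND_EXCLUDED'
  | 'CHECK_UNKNOWN_DOCUMENTS';

// Hypothetical, reduced state: the real state has many more properties.
interface SketchState {
  controlState: ControlState;
  sourceIndex: string;
  targetIndex?: string;
}

// Either-like result of the in-place mappings update, tagged as in fp-ts.
type UpdateResult =
  | { _tag: 'Right'; right: 'update_mappings_succeeded' }
  | { _tag: 'Left'; left: { type: 'incompatible_mapping_exception' } };

function nextState(state: SketchState, res: UpdateResult): SketchState {
  if (res._tag === 'Right') {
    // Compatible mappings: keep the same index (source == target) and skip reindexing.
    return {
      ...state,
      controlState: 'CLEANUP_UNKNOWN_AND_EXCLUDED',
      targetIndex: state.sourceIndex,
    };
  }
  // Incompatible mappings: continue with the regular reindexing flow.
  return { ...state, controlState: 'CHECK_UNKNOWN_DOCUMENTS' };
}
```

The function returns a new object rather than mutating its input, which mirrors the spread-based updates used in the model.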

|

||||

|

|

|

|||

|

|

@ -7,44 +7,46 @@

|

|||

*/

|

||||

|

||||

import type { ElasticsearchClient } from '@kbn/core-elasticsearch-server';

|

||||

import { omit } from 'lodash';

|

||||

import type {

|

||||

AllActionStates,

|

||||

ReindexSourceToTempOpenPit,

|

||||

ReindexSourceToTempRead,

|

||||

ReindexSourceToTempClosePit,

|

||||

ReindexSourceToTempTransform,

|

||||

MarkVersionIndexReady,

|

||||

CalculateExcludeFiltersState,

|

||||

UpdateSourceMappingsState,

|

||||

CheckTargetMappingsState,

|

||||

CheckUnknownDocumentsState,

|

||||

CleanupUnknownAndExcluded,

|

||||

CleanupUnknownAndExcludedWaitForTaskState,

|

||||

CloneTempToSource,

|

||||

CreateNewTargetState,

|

||||

CreateReindexTempState,

|

||||

InitState,

|

||||

LegacyCreateReindexTargetState,

|

||||

LegacyDeleteState,

|

||||

LegacyReindexState,

|

||||

LegacyReindexWaitForTaskState,

|

||||

LegacySetWriteBlockState,

|

||||

OutdatedDocumentsTransform,

|

||||

SetSourceWriteBlockState,

|

||||

State,

|

||||

UpdateTargetMappingsState,

|

||||

UpdateTargetMappingsWaitForTaskState,

|

||||

CreateReindexTempState,

|

||||

MarkVersionIndexReady,

|

||||

MarkVersionIndexReadyConflict,

|

||||

CreateNewTargetState,

|

||||

CloneTempToSource,

|

||||

SetTempWriteBlock,

|

||||

WaitForYellowSourceState,

|

||||

TransformedDocumentsBulkIndex,

|

||||

ReindexSourceToTempIndexBulk,

|

||||

OutdatedDocumentsRefresh,

|

||||

OutdatedDocumentsSearchClosePit,

|

||||

OutdatedDocumentsSearchOpenPit,

|

||||

OutdatedDocumentsSearchRead,

|

||||

OutdatedDocumentsSearchClosePit,

|

||||

RefreshTarget,

|

||||

OutdatedDocumentsRefresh,

|

||||

CheckUnknownDocumentsState,

|

||||

CalculateExcludeFiltersState,

|

||||

WaitForMigrationCompletionState,

|

||||

CheckTargetMappingsState,

|

||||

OutdatedDocumentsTransform,

|

||||

PrepareCompatibleMigration,

|

||||

CleanupUnknownAndExcluded,

|

||||

CleanupUnknownAndExcludedWaitForTaskState,

|

||||

RefreshTarget,

|

||||

ReindexSourceToTempClosePit,

|

||||

ReindexSourceToTempIndexBulk,

|

||||

ReindexSourceToTempOpenPit,

|

||||

ReindexSourceToTempRead,

|

||||

ReindexSourceToTempTransform,

|

||||

SetSourceWriteBlockState,

|

||||

SetTempWriteBlock,

|

||||

State,

|

||||

TransformedDocumentsBulkIndex,

|

||||

UpdateTargetMappingsState,

|

||||

UpdateTargetMappingsWaitForTaskState,

|

||||

WaitForMigrationCompletionState,

|

||||

WaitForYellowSourceState,

|

||||

} from './state';

|

||||

import type { TransformRawDocs } from './types';

|

||||

import * as Actions from './actions';

|

||||

|

|

@ -70,6 +72,12 @@ export const nextActionMap = (client: ElasticsearchClient, transformRawDocs: Tra

|

|||

Actions.fetchIndices({ client, indices: [state.currentAlias, state.versionAlias] }),

|

||||

WAIT_FOR_YELLOW_SOURCE: (state: WaitForYellowSourceState) =>

|

||||

Actions.waitForIndexStatus({ client, index: state.sourceIndex.value, status: 'yellow' }),

|

||||

UPDATE_SOURCE_MAPPINGS: (state: UpdateSourceMappingsState) =>

|

||||

Actions.updateMappings({

|

||||

client,

|

||||

index: state.sourceIndex.value, // attempt to update source mappings in-place

|

||||

mappings: omit(state.targetIndexMappings, ['_meta']), // ._meta property will be updated on a later step

|

||||

}),

|

||||

CLEANUP_UNKNOWN_AND_EXCLUDED: (state: CleanupUnknownAndExcluded) =>

|

||||

Actions.cleanupUnknownAndExcluded({

|

||||

client,

|

||||

|

|

@ -163,7 +171,7 @@ export const nextActionMap = (client: ElasticsearchClient, transformRawDocs: Tra

|

|||

Actions.updateAndPickupMappings({

|

||||

client,

|

||||

index: state.targetIndex,

|

||||

mappings: state.targetIndexMappings,

|

||||

mappings: omit(state.targetIndexMappings, ['_meta']), // ._meta property will be updated on a later step

|

||||

}),

|

||||

UPDATE_TARGET_MAPPINGS_WAIT_FOR_TASK: (state: UpdateTargetMappingsWaitForTaskState) =>

|

||||

Actions.waitForPickupUpdatedMappingsTask({

|

||||

|

|

@ -172,10 +180,10 @@ export const nextActionMap = (client: ElasticsearchClient, transformRawDocs: Tra

|

|||

timeout: '60s',

|

||||

}),

|

||||

UPDATE_TARGET_MAPPINGS_META: (state: UpdateTargetMappingsState) =>

|

||||

Actions.updateTargetMappingsMeta({

|

||||

Actions.updateMappings({

|

||||

client,

|

||||

index: state.targetIndex,

|

||||

meta: state.targetIndexMappings._meta,

|

||||

mappings: state.targetIndexMappings,

|

||||

}),

|

||||

CHECK_VERSION_INDEX_READY_ACTIONS: () => Actions.noop,

|

||||

OUTDATED_DOCUMENTS_SEARCH_OPEN_PIT: (state: OutdatedDocumentsSearchOpenPit) =>
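In the action map above, the mappings are sent without `_meta` first, and `_meta` is only written in the later `UPDATE_TARGET_MAPPINGS_META` step, which sends the full mappings. The commit uses lodash `omit` for the first part. The sketch below shows the same idea without dependencies, using object rest destructuring. `MappingsSketch` and `withoutMeta` are hypothetical names for illustration and are much simpler than Kibana's `IndexMapping`.

```typescript
// Hypothetical, reduced mapping shape for illustration only.
interface MappingsSketch {
  properties: Record<string, { type: string }>;
  _meta?: Record<string, unknown>;
}

// Dependency-free equivalent of `omit(mappings, ['_meta'])` for this one key.
// Returns a shallow copy; the input object is left untouched.
function withoutMeta(mappings: MappingsSketch): Omit<MappingsSketch, '_meta'> {
  const { _meta, ...rest } = mappings;
  return rest;
}

const targetMappings: MappingsSketch = {
  properties: { created_at: { type: 'date' } },
  _meta: { migrationMappingPropertyHashes: { references: '7997cf5a56cc02bdc9c93361bde732b0' } },
};

// First step: properties only. A later step would send `targetMappings` in full.
const firstStepPayload = withoutMeta(targetMappings);
```

Deferring `_meta` presumably ensures the stored property hashes only change once the earlier steps have succeeded, though the commit does not state this motivation explicitly.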

|

||||

|

|

|

|||

|

|

@ -243,6 +243,13 @@ export interface WaitForYellowSourceState extends BaseWithSource {

|

|||

readonly aliases: Record<string, string | undefined>;

|

||||

}

|

||||

|

||||

export interface UpdateSourceMappingsState extends BaseState {

|

||||

readonly controlState: 'UPDATE_SOURCE_MAPPINGS';

|

||||

readonly sourceIndex: Option.Some<string>;

|

||||

readonly sourceIndexMappings: IndexMapping;

|

||||

readonly aliases: Record<string, string | undefined>;

|

||||

}

|

||||

|

||||

export interface CheckUnknownDocumentsState extends BaseWithSource {

|

||||

/** Check if any unknown document is present in the source index */

|

||||

readonly controlState: 'CHECK_UNKNOWN_DOCUMENTS';

|

||||

|

|

@ -493,6 +500,7 @@ export type State = Readonly<

|

|||

| WaitForMigrationCompletionState

|

||||

| DoneState

|

||||

| WaitForYellowSourceState

|

||||

| UpdateSourceMappingsState

|

||||

| CheckUnknownDocumentsState

|

||||

| SetSourceWriteBlockState

|

||||

| CalculateExcludeFiltersState

|

||||

|

|

|

|||

Binary file not shown.

|

|

@ -24,90 +24,104 @@ async function removeLogFile() {

|

|||

}

|

||||

|

||||

describe('migration from 7.13 to 7.14+ with many failed action_tasks', () => {

|

||||

let esServer: TestElasticsearchUtils;

|

||||

let root: Root;

|

||||

let startES: () => Promise<TestElasticsearchUtils>;

|

||||

describe('if mappings are incompatible (reindex required)', () => {

|

||||

let esServer: TestElasticsearchUtils;

|

||||

let root: Root;

|

||||

let startES: () => Promise<TestElasticsearchUtils>;

|

||||

|

||||

beforeAll(async () => {

|

||||

await removeLogFile();

|

||||

});

|

||||

|

||||

beforeEach(() => {

|

||||

({ startES } = createTestServers({

|

||||

adjustTimeout: (t: number) => jest.setTimeout(t),

|

||||

settings: {

|

||||

es: {

|

||||

license: 'basic',

|

||||

dataArchive: Path.join(__dirname, '..', 'archives', '7.13_1.5k_failed_action_tasks.zip'),

|

||||

},

|

||||

},

|

||||

}));

|

||||

});

|

||||

|

||||

afterEach(async () => {

|

||||

if (root) {

|

||||

await root.shutdown();

|

||||

}

|

||||

if (esServer) {

|

||||

await esServer.stop();

|

||||

}

|

||||

|

||||

await new Promise((resolve) => setTimeout(resolve, 10000));

|

||||

});

|

||||

|

||||

const getCounts = async (

|

||||

kibanaIndexName = '.kibana',

|

||||

taskManagerIndexName = '.kibana_task_manager'

|

||||

): Promise<{ tasksCount: number; actionTaskParamsCount: number }> => {

|

||||

const esClient: ElasticsearchClient = esServer.es.getClient();

|

||||

|

||||

const actionTaskParamsResponse = await esClient.count({

|

||||

index: kibanaIndexName,

|

||||

body: {

|

||||

query: {

|

||||

bool: { must: { term: { type: 'action_task_params' } } },

|

||||

},

|

||||

},

|

||||

});

|

||||

const tasksResponse = await esClient.count({

|

||||

index: taskManagerIndexName,

|

||||

body: {

|

||||

query: {

|

||||

bool: { must: { term: { type: 'task' } } },

|

||||

},

|

||||

},

|

||||

beforeAll(async () => {

|

||||

await removeLogFile();

|

||||

});

|

||||

|

||||

return {

|

||||

actionTaskParamsCount: actionTaskParamsResponse.count,

|

||||

tasksCount: tasksResponse.count,

|

||||

beforeEach(() => {

|

||||

({ startES } = createTestServers({

|

||||

adjustTimeout: (t: number) => jest.setTimeout(t),

|

||||

settings: {

|

||||

es: {

|

||||

license: 'basic',

|

||||

dataArchive: Path.join(

|

||||

__dirname,

|

||||

'..',

|

||||

'archives',

|

||||

'7.13_1.5k_failed_action_tasks.zip'

|

||||

),

|

||||

},

|

||||

},

|

||||

}));

|

||||

});

|

||||

|

||||

afterEach(async () => {

|

||||

if (root) {

|

||||

await root.shutdown();

|

||||

}

|

||||

if (esServer) {

|

||||

await esServer.stop();

|

||||

}

|

||||

|

||||

await new Promise((resolve) => setTimeout(resolve, 10000));

|

||||

});

|

||||

|

||||

const getCounts = async (

|

||||

kibanaIndexName = '.kibana',

|

||||

taskManagerIndexName = '.kibana_task_manager'

|

||||

): Promise<{ tasksCount: number; actionTaskParamsCount: number }> => {

|

||||

const esClient: ElasticsearchClient = esServer.es.getClient();

|

||||

|

||||

const actionTaskParamsResponse = await esClient.count({

|

||||

index: kibanaIndexName,

|

||||

body: {

|

||||

query: {

|

||||

bool: { must: { term: { type: 'action_task_params' } } },

|

||||

},

|

||||

},

|

||||

});

|

||||

const tasksResponse = await esClient.count({

|

||||

index: taskManagerIndexName,

|

||||

body: {

|

||||

query: {

|

||||

bool: { must: { term: { type: 'task' } } },

|

||||

},

|

||||

},

|

||||

});

|

||||

|

||||

return {

|

||||

actionTaskParamsCount: actionTaskParamsResponse.count,

|

||||

tasksCount: tasksResponse.count,

|

||||

};

|

||||

};

|

||||

};

|

||||

|

||||

it('filters out all outdated action_task_params and action tasks', async () => {

|

||||

esServer = await startES();

|

||||

it('filters out all outdated action_task_params and action tasks', async () => {

|

||||

esServer = await startES();

|

||||

|

||||

// Verify counts in current index before migration starts

|

||||

expect(await getCounts()).toEqual({

|

||||

actionTaskParamsCount: 2010,

|

||||

tasksCount: 2020,

|

||||

});

|

||||

// Verify counts in current index before migration starts

|

||||

expect(await getCounts()).toEqual({

|

||||

actionTaskParamsCount: 2010,

|

||||

tasksCount: 2020,

|

||||

});

|

||||

|

||||

root = createRoot();

|

||||

await root.preboot();

|

||||

await root.setup();

|

||||

await root.start();

|

||||

root = createRoot();

|

||||

await root.preboot();

|

||||

await root.setup();

|

||||

await root.start();

|

||||

|

||||

// Bulk of tasks should have been filtered out of current index

|

||||

const { actionTaskParamsCount, tasksCount } = await getCounts();

|

||||

// Use toBeLessThan to avoid flakiness in the case that TM starts manipulating docs before the counts are taken

|

||||

expect(actionTaskParamsCount).toBeLessThan(1000);

|

||||

expect(tasksCount).toBeLessThan(1000);

|

||||

// Bulk of tasks should have been filtered out of current index

|

||||

const { actionTaskParamsCount, tasksCount } = await getCounts();

|

||||

// Use toBeLessThan to avoid flakiness in the case that TM starts manipulating docs before the counts are taken

|

||||

expect(actionTaskParamsCount).toBeLessThan(1000);

|

||||

expect(tasksCount).toBeLessThan(1000);

|

||||

|

||||

// Verify that docs were not deleted from old index

|

||||

expect(await getCounts('.kibana_7.13.5_001', '.kibana_task_manager_7.13.5_001')).toEqual({

|

||||

actionTaskParamsCount: 2010,

|

||||

tasksCount: 2020,

|

||||

const {

|

||||

actionTaskParamsCount: oldIndexActionTaskParamsCount,

|

||||

tasksCount: oldIndexTasksCount,

|

||||

} = await getCounts('.kibana_7.13.5_001', '.kibana_task_manager_7.13.5_001');

|

||||

|

||||

// .kibana mappings changes are NOT compatible, we reindex and preserve old index's documents

|

||||

expect(oldIndexActionTaskParamsCount).toEqual(2010);

|

||||

|

||||

// ATM .kibana_task_manager mappings changes are compatible, we skip reindex and actively delete unwanted documents

|

||||

// if the mappings become incompatible in the future, then we will reindex and the old index must still contain all 2020 docs

|

||||

// if the mappings remain compatible, we reuse the existing index and actively delete unwanted documents from it

|

||||

expect(oldIndexTasksCount === 2020 || oldIndexTasksCount < 1000).toEqual(true);

|

||||

});

|

||||

});

|

||||

});
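
The final assertion in the test above accepts two outcomes for the old `.kibana_task_manager` index, depending on whether its mappings turn out to be compatible. As a minimal sketch, that either-or check can be written as a standalone predicate. The helper name `isExpectedOldIndexTasksCount` and its default parameters are hypothetical; the values 2020 and 1000 are simply the literals used in the test.

```typescript
// Hypothetical helper (not part of the PR): mirrors the either-or assertion in the test.
// - reindex path: the old index is left untouched and still holds every original doc
// - in-place path: the existing index is reused and unwanted docs are deleted from it
function isExpectedOldIndexTasksCount(
  count: number,
  originalCount: number = 2020,
  filteredUpperBound: number = 1000
): boolean {
  return count === originalCount || count < filteredUpperBound;
}

console.log(isExpectedOldIndexTasksCount(2020)); // reindex path: all docs preserved
console.log(isExpectedOldIndexTasksCount(500)); // in-place path: docs were filtered out
console.log(isExpectedOldIndexTasksCount(1500)); // neither outcome: would fail the test
```

A count between the two bounds matches neither path, which is why the test treats it as a failure rather than as flakiness.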

|

||||

|

|

|

|||

|

|

@ -122,30 +122,30 @@ describe('migration v2', () => {

|

|||

|

||||

// 23 saved objects + 14 corrupt (discarded) = 37 total in the old index

|

||||

expect((docs.hits.total as SearchTotalHits).value).toEqual(23);

|

||||

expect(docs.hits.hits.map(({ _id }) => _id)).toEqual([

|

||||

expect(docs.hits.hits.map(({ _id }) => _id).sort()).toEqual([

|

||||

'config:7.13.0',

|

||||

'index-pattern:logs-*',

|

||||

'index-pattern:metrics-*',

|

||||

'ui-metric:console:DELETE_delete',

|

||||

'ui-metric:console:GET_get',

|

||||

'ui-metric:console:GET_search',

|

||||

'ui-metric:console:POST_delete_by_query',

|

||||

'ui-metric:console:POST_index',

|

||||

'ui-metric:console:PUT_indices.put_mapping',

|

||||

'usage-counters:uiCounter:21052021:click:global_search_bar:user_navigated_to_application',

|

||||

'usage-counters:uiCounter:21052021:count:console:GET_cat.aliases',

|

||||

'usage-counters:uiCounter:21052021:loaded:console:opened_app',

|

||||

'usage-counters:uiCounter:21052021:count:console:GET_cat.indices',

|

||||

'usage-counters:uiCounter:21052021:count:global_search_bar:search_focus',

|

||||

'usage-counters:uiCounter:21052021:click:global_search_bar:user_navigated_to_application_unknown',

|

||||

'usage-counters:uiCounter:21052021:count:console:DELETE_delete',

|

||||

'usage-counters:uiCounter:21052021:count:console:GET_cat.aliases',

|

||||

'usage-counters:uiCounter:21052021:count:console:GET_cat.indices',

|

||||

'usage-counters:uiCounter:21052021:count:console:GET_get',

|

||||

'usage-counters:uiCounter:21052021:count:console:GET_search',

|

||||

'usage-counters:uiCounter:21052021:count:console:POST_delete_by_query',

|

||||

'usage-counters:uiCounter:21052021:count:console:POST_index',

|

||||

'usage-counters:uiCounter:21052021:count:console:PUT_indices.put_mapping',

|

||||

'usage-counters:uiCounter:21052021:count:global_search_bar:search_focus',

|

||||

'usage-counters:uiCounter:21052021:count:global_search_bar:search_request',

|

||||

'usage-counters:uiCounter:21052021:count:global_search_bar:shortcut_used',

|

||||

'ui-metric:console:POST_delete_by_query',

|

||||

'usage-counters:uiCounter:21052021:count:console:PUT_indices.put_mapping',

|

||||

'usage-counters:uiCounter:21052021:count:console:POST_delete_by_query',

|

||||

'usage-counters:uiCounter:21052021:count:console:GET_search',

|

||||

'ui-metric:console:PUT_indices.put_mapping',

|

||||

'ui-metric:console:GET_search',

|

||||

'usage-counters:uiCounter:21052021:count:console:DELETE_delete',

|

||||

'ui-metric:console:DELETE_delete',

|

||||

'usage-counters:uiCounter:21052021:count:console:GET_get',

|

||||

'ui-metric:console:GET_get',

|

||||

'usage-counters:uiCounter:21052021:count:console:POST_index',

|

||||

'ui-metric:console:POST_index',

|

||||

'usage-counters:uiCounter:21052021:loaded:console:opened_app',

|

||||

]);

|

||||

});

|

||||

});

|

||||

|

|

|

|||

|

|

@ -8,12 +8,10 @@

|

|||

|

||||

import Path from 'path';

|

||||

import fs from 'fs/promises';

|

||||

import JSON5 from 'json5';

|

||||

import { Env } from '@kbn/config';

|

||||

import { REPO_ROOT } from '@kbn/repo-info';

|

||||

import { getEnvOptions } from '@kbn/config-mocks';

|

||||

import { Root } from '@kbn/core-root-server-internal';

|

||||

import { LogRecord } from '@kbn/logging';

|

||||

import {

|

||||

createRootWithCorePlugins,

|

||||

createTestServers,

|

||||

|

|

@ -23,30 +21,14 @@ import { delay } from '../test_utils';

|

|||

|

||||

const logFilePath = Path.join(__dirname, 'check_target_mappings.log');

|

||||

|

||||

async function removeLogFile() {

|

||||

// ignore errors if it doesn't exist

|

||||

await fs.unlink(logFilePath).catch(() => void 0);

|

||||

}

|

||||

|

||||

async function parseLogFile() {

|

||||

const logFileContent = await fs.readFile(logFilePath, 'utf-8');

|

||||

|

||||

return logFileContent

|

||||

.split('\n')

|

||||

.filter(Boolean)

|

||||

.map((str) => JSON5.parse(str)) as LogRecord[];

|

||||

}

|

||||

|

||||

function logIncludes(logs: LogRecord[], message: string): boolean {

|

||||

return Boolean(logs?.find((rec) => rec.message.includes(message)));

|

||||

}

|

||||

|

||||

describe('migration v2 - CHECK_TARGET_MAPPINGS', () => {

|

||||

let esServer: TestElasticsearchUtils;

|

||||

let root: Root;

|

||||

let logs: LogRecord[];

|

||||

let logs: string;

|

||||

|

||||

beforeEach(async () => await removeLogFile());

|

||||

beforeEach(async () => {

|

||||

await fs.unlink(logFilePath).catch(() => {});

|

||||

});

|

||||

|

||||

afterEach(async () => {

|

||||

await root?.shutdown();

|

||||

|

|

@ -71,9 +53,10 @@ describe('migration v2 - CHECK_TARGET_MAPPINGS', () => {

|

|||

await root.start();

|

||||

|

||||

// Check for migration steps present in the logs

|

||||

logs = await parseLogFile();

|

||||

expect(logIncludes(logs, 'CREATE_NEW_TARGET')).toEqual(true);

|

||||

expect(logIncludes(logs, 'CHECK_TARGET_MAPPINGS')).toEqual(false);

|

||||

logs = await fs.readFile(logFilePath, 'utf-8');

|

||||

|

||||

expect(logs).toMatch('CREATE_NEW_TARGET');

|

||||

expect(logs).not.toMatch('CHECK_TARGET_MAPPINGS');

|

||||

});

|

||||

|

||||

describe('when the indices are aligned with the stack version', () => {

|

||||

|

|

@ -98,7 +81,7 @@ describe('migration v2 - CHECK_TARGET_MAPPINGS', () => {

|

|||

// stop Kibana and remove logs

|

||||

await root.shutdown();

|

||||

await delay(10);

|

||||

await removeLogFile();

|

||||

await fs.unlink(logFilePath).catch(() => {});

|

||||

|

||||

root = createRoot();

|

||||

await root.preboot();

|

||||

|

|

@ -106,14 +89,12 @@ describe('migration v2 - CHECK_TARGET_MAPPINGS', () => {

|

|||

await root.start();

|

||||

|

||||

// Check for migration steps present in the logs

|

||||

logs = await parseLogFile();

|

||||

expect(logIncludes(logs, 'CREATE_NEW_TARGET')).toEqual(false);

|

||||

expect(

|

||||

logIncludes(logs, 'CHECK_TARGET_MAPPINGS -> CHECK_VERSION_INDEX_READY_ACTIONS')

|

||||

).toEqual(true);

|

||||

expect(logIncludes(logs, 'UPDATE_TARGET_MAPPINGS')).toEqual(false);

|

||||

expect(logIncludes(logs, 'UPDATE_TARGET_MAPPINGS_WAIT_FOR_TASK')).toEqual(false);

|

||||

expect(logIncludes(logs, 'UPDATE_TARGET_MAPPINGS_META')).toEqual(false);

|

||||

logs = await fs.readFile(logFilePath, 'utf-8');

|

||||

expect(logs).not.toMatch('CREATE_NEW_TARGET');

|

||||

expect(logs).toMatch('CHECK_TARGET_MAPPINGS -> CHECK_VERSION_INDEX_READY_ACTIONS');

|

||||

expect(logs).not.toMatch('UPDATE_TARGET_MAPPINGS');

|

||||

expect(logs).not.toMatch('UPDATE_TARGET_MAPPINGS_WAIT_FOR_TASK');

|

||||

expect(logs).not.toMatch('UPDATE_TARGET_MAPPINGS_META');

|

||||

});

|

||||

});

|

||||

|

||||

|

|

@ -140,23 +121,13 @@ describe('migration v2 - CHECK_TARGET_MAPPINGS', () => {

|

|||

await root.start();

|

||||

|

||||

// Check for migration steps present in the logs

|

||||

logs = await parseLogFile();

|

||||

expect(logIncludes(logs, 'CREATE_NEW_TARGET')).toEqual(false);

|

||||

expect(logIncludes(logs, 'CHECK_TARGET_MAPPINGS -> UPDATE_TARGET_MAPPINGS')).toEqual(true);

|

||||

expect(

|

||||

logIncludes(logs, 'UPDATE_TARGET_MAPPINGS -> UPDATE_TARGET_MAPPINGS_WAIT_FOR_TASK')

|

||||

).toEqual(true);

|

||||

expect(

|

||||

logIncludes(logs, 'UPDATE_TARGET_MAPPINGS_WAIT_FOR_TASK -> UPDATE_TARGET_MAPPINGS_META')

|

||||

).toEqual(true);

|

||||

expect(

|

||||

logIncludes(logs, 'UPDATE_TARGET_MAPPINGS_META -> CHECK_VERSION_INDEX_READY_ACTIONS')

|

||||

).toEqual(true);

|

||||

expect(

|

||||

logIncludes(logs, 'CHECK_VERSION_INDEX_READY_ACTIONS -> MARK_VERSION_INDEX_READY')

|

||||

).toEqual(true);

|

||||

expect(logIncludes(logs, 'MARK_VERSION_INDEX_READY -> DONE')).toEqual(true);

|

||||

expect(logIncludes(logs, 'Migration completed')).toEqual(true);

|

||||

logs = await fs.readFile(logFilePath, 'utf-8');

|

||||

expect(logs).not.toMatch('CREATE_NEW_TARGET');

|

||||

expect(logs).toMatch('CHECK_TARGET_MAPPINGS -> UPDATE_TARGET_MAPPINGS');

|

||||

expect(logs).toMatch('UPDATE_TARGET_MAPPINGS -> UPDATE_TARGET_MAPPINGS_WAIT_FOR_TASK');

|

||||

expect(logs).toMatch('UPDATE_TARGET_MAPPINGS_WAIT_FOR_TASK -> UPDATE_TARGET_MAPPINGS_META');

|

||||

expect(logs).toMatch('UPDATE_TARGET_MAPPINGS_META -> CHECK_VERSION_INDEX_READY_ACTIONS');

|

||||

expect(logs).toMatch('Migration completed');

|

||||

});

|

||||

});

|

||||

});
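
The rewritten assertions above match state transitions as plain substrings of the raw log text instead of parsing each log record. A minimal sketch of that idea follows, assuming transitions appear in the log as `FROM -> TO`. The helper `hasTransition` and the sample log lines are hypothetical and only illustrate the matching style; they are not taken from real Kibana output.

```typescript
// Hypothetical helper (not part of the PR): checks whether raw log text contains
// a "FROM -> TO" state transition, using the same substring style as the test.
function hasTransition(logs: string, from: string, to: string): boolean {
  return logs.includes(`${from} -> ${to}`);
}

// Made-up sample log text, for illustration only.
const sampleLogs: string = [
  '[.kibana] CHECK_TARGET_MAPPINGS -> UPDATE_TARGET_MAPPINGS',
  '[.kibana] UPDATE_TARGET_MAPPINGS -> UPDATE_TARGET_MAPPINGS_WAIT_FOR_TASK',
].join('\n');

console.log(hasTransition(sampleLogs, 'CHECK_TARGET_MAPPINGS', 'UPDATE_TARGET_MAPPINGS'));
console.log(hasTransition(sampleLogs, 'CREATE_NEW_TARGET', 'MARK_VERSION_INDEX_READY'));
```

Because the match is a substring test, a negative check on a short name such as `UPDATE_TARGET_MAPPINGS` also rules out every longer state name that starts with it, for example `UPDATE_TARGET_MAPPINGS_WAIT_FOR_TASK`.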

|

||||

|

|

|

|||

|

|

@ -10,28 +10,176 @@ import Path from 'path';

|

|||

import Fs from 'fs';

|

||||

import Util from 'util';

|

||||

import JSON5 from 'json5';

|

||||

import { type TestElasticsearchUtils } from '@kbn/core-test-helpers-kbn-server';

|

||||

import { SavedObjectsType } from '@kbn/core-saved-objects-server';

|

||||

import { ElasticsearchClient } from '@kbn/core-elasticsearch-server';

|

||||

import { getMigrationDocLink, delay } from '../test_utils';

|

||||

import {

|

||||

createTestServers,

|

||||

createRootWithCorePlugins,

|

||||

type TestElasticsearchUtils,

|

||||

} from '@kbn/core-test-helpers-kbn-server';

|

||||

import { Root } from '@kbn/core-root-server-internal';

|

||||

import { getMigrationDocLink } from '../test_utils';

|

||||

clearLog,

|

||||

currentVersion,

|

||||

defaultKibanaIndex,

|

||||

getKibanaMigratorTestKit,

|

||||

nextMinor,

|

||||

startElasticsearch,

|

||||

} from '../kibana_migrator_test_kit';

|

||||

|

||||

const migrationDocLink = getMigrationDocLink().resolveMigrationFailures;

|

||||

const logFilePath = Path.join(__dirname, 'cleanup.log');

|

||||

|

||||

const asyncUnlink = Util.promisify(Fs.unlink);

|

||||

const asyncReadFile = Util.promisify(Fs.readFile);

|

||||

|

||||

async function removeLogFile() {

|

||||

// ignore errors if it doesn't exist

|

||||

await asyncUnlink(logFilePath).catch(() => void 0);

|

||||

}

|

||||

describe('migration v2', () => {

|

||||

let esServer: TestElasticsearchUtils['es'];

|

||||

let esClient: ElasticsearchClient;

|

||||

|

||||

function createRoot() {

|

||||

return createRootWithCorePlugins(

|

||||

beforeAll(async () => {

|

||||

esServer = await startElasticsearch();

|

||||

});

|

||||

|

||||

beforeEach(async () => {

|

||||

esClient = await setupBaseline();

|

||||

await clearLog(logFilePath);

|

||||

});

|

||||

|

||||

it('clean ups if migration fails', async () => {

|

||||

const { migrator, client } = await setupNextMinor();

|

||||

migrator.prepareMigrations();

|

||||

|

||||

await expect(migrator.runMigrations()).rejects.toThrowErrorMatchingInlineSnapshot(`

|

||||

"Unable to complete saved object migrations for the [${defaultKibanaIndex}] index: Migrations failed. Reason: 1 corrupt saved object documents were found: corrupt:2baf4de0-a6d4-11ed-ba5a-39196fc76e60

|

||||

|

||||

To allow migrations to proceed, please delete or fix these documents.

|

||||

Note that you can configure Kibana to automatically discard corrupt documents and transform errors for this migration.

|

||||

Please refer to ${migrationDocLink} for more information."

|

||||

`);

|

||||

|

||||

const logFileContent = await asyncReadFile(logFilePath, 'utf-8');

|

||||

const records = logFileContent

|

||||

.split('\n')

|

||||

.filter(Boolean)

|

||||

.map((str) => JSON5.parse(str));

|

||||

|

||||

const logRecordWithPit = records.find(

|

||||

(rec) => rec.message === `[${defaultKibanaIndex}] REINDEX_SOURCE_TO_TEMP_OPEN_PIT RESPONSE`

|

||||

);

|

||||

|

||||

expect(logRecordWithPit).toBeTruthy();

|

||||

|

||||

const pitId = logRecordWithPit.right.pitId;

|

||||

expect(pitId).toBeTruthy();

|

||||

|

||||

await expect(

|

||||

client.search({

|

||||

body: {

|

||||

pit: { id: pitId },

|

||||

},

|

||||

})

|

||||

// throws an exception that cannot search with closed PIT

|

||||

).rejects.toThrow(/search_phase_execution_exception/);

|

||||

});

|

||||

|

||||

afterEach(async () => {

|

||||

await esClient?.indices.delete({ index: `${defaultKibanaIndex}_${currentVersion}_001` });

|

||||

});

|

||||

|

||||

afterAll(async () => {

|

||||

await esServer?.stop();

|

||||

await delay(10);

|

||||

});

|

||||

});

|

||||

|

||||

const setupBaseline = async () => {

|

||||

const typesCurrent: SavedObjectsType[] = [

|

||||

{

|

||||

name: 'complex',

|

||||

hidden: false,

|

||||

namespaceType: 'agnostic',

|

||||

mappings: {

|

||||

properties: {

|

||||

name: { type: 'text' },

|

||||

value: { type: 'integer' },

|

||||

},

|

||||

},

|

||||

migrations: {},

|

||||

},

|

||||

];

|

||||

|

||||

const savedObjects = [

|

||||

{

|

||||

id: 'complex:4baf4de0-a6d4-11ed-ba5a-39196fc76e60',

|

||||

body: {

|

||||

type: 'complex',

|

||||

complex: {

|

||||

name: 'foo',

|

||||

value: 5,

|

||||

},

|

||||

references: [],

|

||||

coreMigrationVersion: currentVersion,

|

||||

updated_at: '2023-02-07T11:04:44.914Z',

|

||||

created_at: '2023-02-07T11:04:44.914Z',

|

||||

},

|

||||

},

|

||||

{

|

||||

id: 'corrupt:2baf4de0-a6d4-11ed-ba5a-39196fc76e60', // incorrect id => corrupt object

|

||||

body: {

|

||||

type: 'complex',

|

||||

complex: {

|

||||

name: 'bar',

|

||||

value: 3,

|

||||

},

|

||||

references: [],

|

||||

coreMigrationVersion: currentVersion,

|

||||

updated_at: '2023-02-07T11:04:44.914Z',

|

||||

created_at: '2023-02-07T11:04:44.914Z',

|

||||

},

|

||||

},

|

||||

];

|

||||

|

||||

const { migrator: baselineMigrator, client } = await getKibanaMigratorTestKit({

|

||||

types: typesCurrent,

|

||||

logFilePath,

|

||||

});

|

||||

|

||||

baselineMigrator.prepareMigrations();

|

||||

await baselineMigrator.runMigrations();

|

||||

|

||||

// inject corrupt saved objects directly using esClient

|

||||

await Promise.all(

|

||||

savedObjects.map((savedObject) => {

|

||||

return client.create({

|

||||

index: defaultKibanaIndex,

|

||||

refresh: 'wait_for',

|

||||

...savedObject,

|

||||

});

|

||||

})

|

||||

);

|

||||

|

||||

return client;

|

||||

};

|

||||

|

||||

const setupNextMinor = async () => {

|

||||

const typesNextMinor: SavedObjectsType[] = [

|

||||

{

|

||||

name: 'complex',

|

||||

hidden: false,

|

||||

namespaceType: 'agnostic',

|

||||

mappings: {

|

||||

properties: {

|

||||

name: { type: 'keyword' },

|

||||

value: { type: 'long' },

|

||||

},

|

||||

},

|

||||

migrations: {

|

||||

[nextMinor]: (doc) => doc,

|

||||

},

|

||||

},

|

||||

];

|

||||

|

||||

const { migrator, client } = await getKibanaMigratorTestKit({

|

||||

types: typesNextMinor,

|

||||

kibanaVersion: nextMinor,

|

||||

logFilePath,

|

||||

settings: {

|

||||

migrations: {

|

||||

skip: false,

|

||||

},

|

||||

|

|

@ -54,102 +202,7 @@ function createRoot() {

|

|||

],

|

||||

},

|

||||

},

|

||||

{

|

||||

oss: true,

|

||||

}

|

||||

);

|

||||

}

|

||||

|

||||

describe('migration v2', () => {

|

||||

let esServer: TestElasticsearchUtils;

|

||||

let root: Root;

|

||||

|

||||

beforeAll(async () => {

|

||||

await removeLogFile();

|

||||

});

|

||||

|

||||

afterAll(async () => {

|

||||

if (root) {

|

||||

await root.shutdown();

|

||||

}

|

||||

if (esServer) {

|

||||

await esServer.stop();

|

||||

}

|

||||

|

||||

await new Promise((resolve) => setTimeout(resolve, 10000));

|

||||

});

|

||||

|

||||

it('clean ups if migration fails', async () => {

|

||||

const { startES } = createTestServers({

|

||||

adjustTimeout: (t: number) => jest.setTimeout(t),

|

||||

settings: {

|

||||

es: {

|

||||

license: 'basic',

|

||||

// original SO:

|

||||

// {

|

||||

// _index: '.kibana_7.13.0_001',

|

||||

// _type: '_doc',

|

||||

// _id: 'index-pattern:test_index*',

|

||||

// _version: 1,

|

||||

// result: 'created',

|

||||

// _shards: { total: 2, successful: 1, failed: 0 },

|

||||

// _seq_no: 0,

|

||||

// _primary_term: 1

|

||||

// }

|

||||

dataArchive: Path.join(__dirname, '..', 'archives', '7.13.0_with_corrupted_so.zip'),

|

||||

},

|

||||

},

|

||||

});

|

||||

|

||||

root = createRoot();

|

||||

|

||||

esServer = await startES();

|

||||

await root.preboot();

|

||||

const coreSetup = await root.setup();

|

||||

|

||||

coreSetup.savedObjects.registerType({

|

||||

name: 'foo',

|

||||

hidden: false,

|

||||

mappings: {

|

||||

properties: {},

|

||||

},

|

||||

namespaceType: 'agnostic',

|

||||

migrations: {

|

||||

'7.14.0': (doc) => doc,

|

||||

},

|

||||

});

|

||||

|

||||

await expect(root.start()).rejects.toThrowErrorMatchingInlineSnapshot(`

|

||||

"Unable to complete saved object migrations for the [.kibana] index: Migrations failed. Reason: 1 corrupt saved object documents were found: index-pattern:test_index*

|

||||

|

||||

To allow migrations to proceed, please delete or fix these documents.

|

||||

Note that you can configure Kibana to automatically discard corrupt documents and transform errors for this migration.

|

||||

Please refer to ${migrationDocLink} for more information."

|

||||

`);

|

||||

|

||||

const logFileContent = await asyncReadFile(logFilePath, 'utf-8');

|

||||

const records = logFileContent

|

||||

.split('\n')

|

||||

.filter(Boolean)

|

||||